Note-taking tools have not traditionally been a popular investment category. Apart from Google Keep, Apple Notes, OneNote, and Evernote, only Notion has managed to break through.

The rest are emerging bidirectional-link note apps such as Obsidian and Logseq.

But the AI note-taking product "Granola," launched in May 2024, is thriving. Word of mouth among entrepreneurs and investors in tech circles has driven strong user growth, and many well-known VC partners use it to record founder pitches. According to the company, more than half of Granola users hold leadership positions, and founders and executives of unicorn companies such as Vercel, Ramp, and Roblox use Granola to record meetings every week.

It also raised funding recently. On May 14, Granola announced a $43 million Series B round at a valuation of $250 million. For the note-taking category, this valuation is very impressive.

How did Granola differentiate itself among so many AI note-taking products? In an in-depth interview with the well-known technology podcast "Colossus," founder Chris Pedregal said that Granola is not just a meeting transcription tool. Its core is to be "very personal" and to give users as much "control" as possible. It is a "thinking space" that goes beyond simple record-keeping and integrates deeply into users' workflows. In his view, the real value of AI lies in becoming a powerful next-generation "thinking tool" that enhances and expands human capabilities in unprecedented ways.

In this interview, Chris Pedregal also shared many interesting and thought-provoking points:

⚡️ An interesting observation about thinking tools is that they help humans "externalize" information they would otherwise have to hold in their heads. Granola's positioning: today it helps users generate the highest-quality meeting notes, but in the future its goal should be to help people do almost everything they want to do.

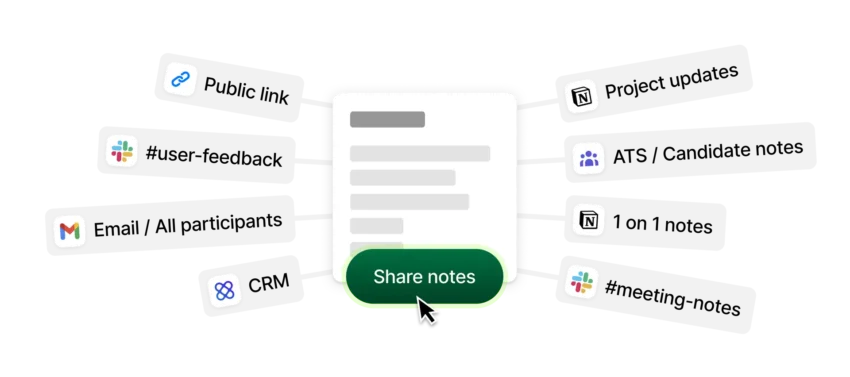

⚡️ Granola’s core is giving users control. Granola is a tool for making users better, which means the user drives it, and every product decision follows from that. Most AI applications that generate notes do not let users edit them; instead they hand over "your notes" as a PDF or email. Granola’s countless tiny decisions revolve around the core philosophy of "let users take control."

⚡️ One of the biggest obstacles to collaborating with AI is interface design. Our interaction with ChatGPT resembles the terminal era of early computing: users enter commands and the computer returns results. This approach will not disappear, but it will come to look outdated, and it leaves users with little sense of control. Chris Pedregal believes we have not yet found a "steering wheel" for collaborating with AI. Control is still very coarse, so we take turns: I write something, the AI responds, and I adjust again. In the future it will be smoother and more collaborative.

⚡️ When developing a feature, the Granola team is clear about whether it is in "development mode" or "exploration mode," because the two require completely different approaches. Is there a clear idea that only needs to be executed quickly, or is it an open question that must be explored before an answer can be found? Before finding the right direction, it is important to protect the ability to change the product's direction.

⚡️ Today, sources of information and inspiration are too scattered, and we often look at data in isolated silos. I want a tool that can dynamically pull the most relevant information from my personal life, my context, and humanity's collective knowledge, and present it to me in real time so that I can interpret and use it. No one knows yet what this tool looks like.

01

Tools “externalize” how the human brain processes information

Q: “Thinking tools” are a valuable gift that technological development has given humanity. When we first chatted, I was fascinated by how you think about unlocking value for people, and you mentioned the x-y coordinate graph as a great example. Can you explain this and share why you are interested in it?

Chris: I think one of the essential characteristics of human beings is that we are tool makers, which sets us apart from other animals. Throughout history, we have invented tools that have greatly expanded human abilities. Interestingly, some of these tools are designed specifically to assist thinking. Writing is a typical example, as are the various systems of mathematical notation.

When we were still using Roman numerals for calculation, the range of what the brain could process was very limited, and beyond that range we had to rely on an abacus. The numeral system we use now allows us to multiply and divide large numbers easily. Data visualization, which I particularly like, is another great example. About 200 years ago, William Playfair first presented data in graphical form, allowing people to understand data visually.

Over the course of evolution, humans developed the ability to process and understand images quickly. Translating numerical information into visual form lets people judge intuitively whether a line is rising or falling, or whether it is rising faster than before, which was simply unimaginable 200 years ago. What I want to emphasize is that from mathematical notation and writing, to data visualization, to computers, and now to AI, we are entering a whole new stage. At this stage, the power and utility of thinking tools will grow exponentially. While we can’t predict exactly what things will look like in 10 or 20 years, I am sure that the future will be very different from today.

Q: How do you personally think about the new tools that AI may make possible? What first came to mind when you first encountered large language models?

Chris: I have an interesting observation about thinking tools: they often help you “externalize” information you would otherwise have to hold in your head. One of the most common thinking tools today is the notepad and pen. When you write something in your notepad, you don’t have to force yourself to remember all the details, and you can go back to those ideas or notes at any time.

In effect, this expands your memory. The physical limits of the brain determine its memory capacity, and these tools give you extra storage space. I think the amazing thing about large language models is that they can dynamically provide information that is highly relevant to the current situation when you need it, generated in real time for the specific needs of the moment.

If taking notes in a meeting can significantly improve your efficiency and performance, imagine what it would be like if a computer could give you all the relevant background information in the moment to help you perform better in that meeting. Since large language models can instantly reshape and refine information, I believe this will greatly enhance what an individual can do.

Q: How is this implemented? Does it mean that everything in my life, such as the books I have read and the conversations I have taken part in, is eventually stored, and that some mechanism then feeds my current situation to the system so that it can offer ideas or brainstorming suggestions?

Chris: Before we discuss the specific functions of Granola, I would like to explain one thing first: in the field of AI, it is relatively easy to predict one or two steps in the future, but it is very difficult to see what will happen ten or twenty years later.

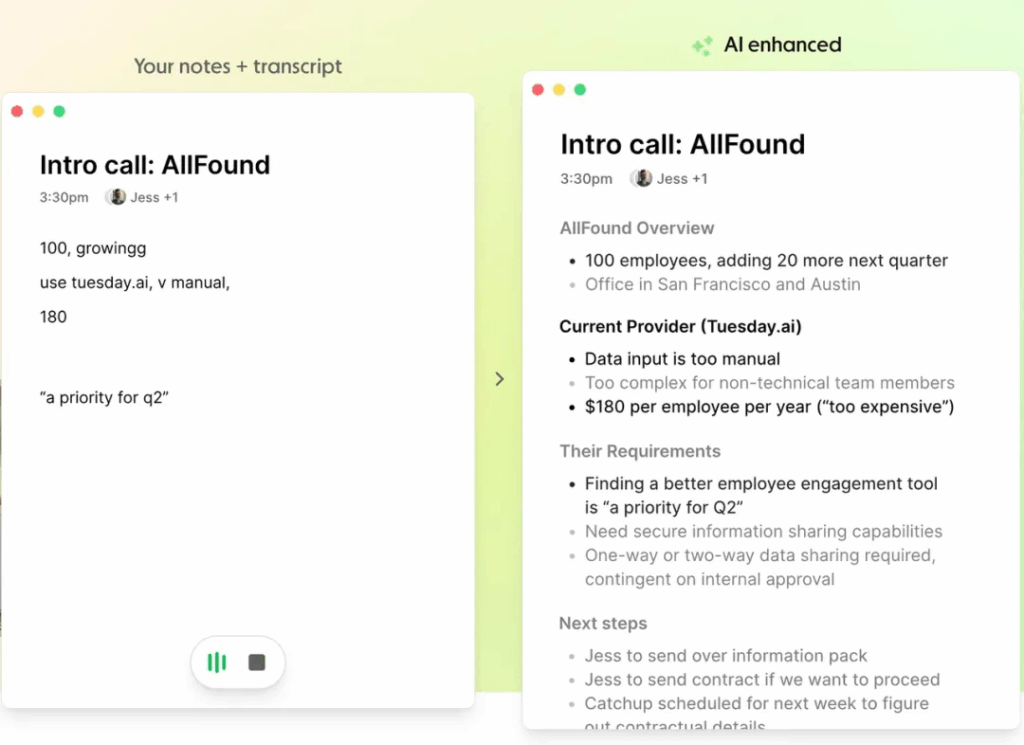

Think of Granola as a digital notebook. Just as with the Apple Notes app on your computer, you can take notes in it. The most notable difference is that Granola also “listens” to what you are discussing.

For example, in a meeting you can jot down notes or passing ideas at any time, while Granola captures and transcribes the conversation in real time. After the meeting, it combines the transcript with the notes you recorded, making the final notes more complete and valuable. This way, you don’t need to record everything in detail. You can focus on the key insights and your own thinking and judgment, and leave the tedious recording work to the AI.

What are the advantages of doing this? We may not yet fully understand how it will change the way people work, but I am sure it is bringing change, because the way I work has changed as a result, as has that of many Granola users. We may have achieved only 5% of our vision so far. But already, when you look back at your meeting notes, you get the full background of the meeting.

You can even interact directly with Granola, asking what was discussed in a meeting or extracting its main topics. We also have an internal feature, not yet officially released, that lets you view all past meeting records related to a particular person or topic and pull out common themes or information across those meetings. This background information could easily have been lost or forgotten (maybe you once wrote it in a notebook but can’t find it, or you simply don’t go digging when you need to make a related decision), and now it is within reach and instantly available.

02

Granola makes efficient use of context windows

Q: How has Granola changed the way you work? As its heaviest user, what has actually changed for you?

Chris: I think this shift in working style will become more and more common. Knowledge workers like you and me are always asking, “What background information do I need at this moment to make the best use of my intellectual strengths?” Anyone who uses ChatGPT or other large language models will be familiar with the concept of a “context window”: you can enter a certain amount of information, which is equivalent to telling the model “this is the current situation, and this is the background knowledge you need.”

In fact, this way of thinking also applies to us humans, and it is how we have always worked. For example, when I needed to write a blog post, I used to pick up a notebook, quickly write down some scattered ideas, and try to organize them into text. Now I first talk to a few experienced friends, who give me a lot of valuable suggestions. I use Granola to record these conversations, so I have both my handwritten notes and a complete transcript of the conversation.

Next, I brainstorm using Granola’s recording feature (this may refer to the chat feature available on top of the transcript), then gather the relevant materials into a Granola folder and work with the AI to refine the overall structure of the article and the suggestions it should cover. Ultimately, I wrote the blog post myself, but the process allowed me to integrate all the suggestions I had received in a very efficient and surprising way. I can say with certainty that without this tool, there is a good chance I would have missed something important. That is the first example.

Another example is the change in behavior we have observed in Granola users, who take notes very differently than before. Heavy users of Granola write down very little in a meeting, usually their own in-the-moment thoughts or judgments rather than anything already contained in the transcript. For example, they might write “This person seems a little aggressive,” “They don’t seem very enthusiastic,” or “I have some concerns about this area, and they didn’t really answer my questions.” These are the most critical and personal judgments. Everything else in the conversation can be left to the AI to transcribe with confidence.

When they look back at their notes in Granola, they often don’t read the full text word by word. Instead, they ask the AI directly about the specific information they are looking for and quickly get high-quality answers. This is much more efficient.

Q: Can you talk about the path from 5% to 100% of the vision? You mentioned that in AI we can only predict a step or two ahead, but looking two or three steps out, where do you think things will go next?

Chris: At the end of the day, the core question is “What information do I need to make the most informed decision at this critical moment?” Imagine you are a CEO: before a crucial, high-stakes negotiation, your team prepares a detailed briefing package with all the relevant information. I believe that in the future, everyone will be able to get such a customized “information package” in real time before every meeting.

The interesting question is, what background information is considered to be truly useful? Is it limited to the content of the last meeting? Or all your emails? Or is it information from the whole world? At the same time, what will the user interface look like? My positioning for Granola is: it can help you generate the highest quality meeting notes at the moment, but in the future, its goal should be to help you do almost everything you want to do.

For example, when you finish a meeting and walk out of the room, you may need to immediately write a follow-up email, draft an investment memo, or organize an event with multiple partners. Granola or similar tools, once they have all the necessary background information, should be able to help you complete 80%, 90%, or even 95% of that work. Both the Granola team and I emphasize one philosophy: we believe that the core value of AI is to empower humans and make users stronger. Of course, AI can also be used to replace a person or a specific task, but we prefer to use it to enhance human abilities, intelligence, and performance. We firmly believe that tools should help humans do more, achieve more, and think more.

Everything we do is centered on this core concept: Granola can help you with tedious things like writing follow-up emails, but you need to add your own thinking and judgment. That is where the real value lies, and it is what determines whether you can convince others. You only need to adjust and improve what the AI generates, rather than getting bogged down in every detail.

03

Granola’s Product Philosophy:

Grant users control

Q: Do you have a very clear product philosophy to guide your decision-making in daily life?

Chris: My personal approach is that most excellent product thinking and design boils down to one simple question: When using a product, when staring at it, ask yourself “How does this make me feel?” Ask this question repeatedly and listen carefully to the answer. Then give the same product, interface or button to another person and ask them the same question.

After doing this a hundred times, you will find that within the first 500 milliseconds of seeing a product, you feel ten emotions. These emotions are fleeting, but they often tell you things like “too complicated,” “too messy,” “I don’t know what to do,” or “this makes me uneasy.” If there were an emotion recorder that could slow these reactions down, it could tell you how to make the product better. Of course there are other important factors, but this question is a wonderful guide.

Q: What you just described is your personal philosophy. Does Granola have its own distinct product philosophy? Does it differ in any way from your personal one?

Chris: Granola’s core is to give users control. Granola is a tool to make you better, which means you drive it, and every decision we make follows from that. Even at the most basic level of the design, it is an editor.

Most AI apps that generate notes won’t let you edit them; they hand you “your notes” as a PDF or email. Our countless small decisions revolve around the core philosophy of putting users in control.

Q: Who are your users? Is there anything about them that surprises you? What are their main occupations? Are they concentrated in a specific industry? What have you learned from actual user data?

Chris: It’s interesting. Our users are people who are inclined to use AI and who embrace new tools and new ways of working. That describes many founders, investors, and people working across disciplines in the AI field. The proportion of marketers at AI startups who use Granola is very high. There is a clear dividing line between those who are willing to try these tools and those who are not.

Q: That sounds very reasonable. As Geoffrey Moore wrote in his book Crossing the Chasm, there will always be a group of “natural early adopters.”

Chris: I remember a strange thing. We released Granola last May, eight or nine months ago. I’ve been making products for a long time, but this launch felt “surreal.” Some people tweeted when the product was first released; we were very happy and did not expect it to be a big hit. A few weeks later, some famous CEOs we did not know started tweeting about it and then sent me direct messages on Twitter with product feedback. Granola resonates with a certain type of person who is active on social media. My Twitter direct messages have basically become a customer service channel for CEOs of big technology companies. It’s a weird experience.

Q: I also contacted you this way, and it is indeed an interesting way to get to know people quickly. Back to building the product: was there an important milestone or decision that was a challenge at the time but, in hindsight, turned out to be a pleasant turning point?

Chris: For Granola, at least, we decided early on to make it a Mac app rather than a bot or web tool that joins the meeting. There were many ways to implement it, and this decision caused a lot of trouble. For example, Granola could initially run only on macOS 13, and at that time only 15% of Mac users were on that version.

We did this to make it like a notebook and a pencil, so that you can pick up Granola at any time without thinking too much, whether you are on Zoom, face to face, or in a Slack Huddle (an audio-only conferencing feature on the Slack platform). An important quality of a tool is reliability and consistency, so that you always know how to use it.

Building a Mac application brought many unexpected benefits: it lives on your computer, and it is more immediate, more controllable, and easier to reach. The closeness people feel to Granola is largely because it is a desktop app, not a web page buried among 50 browser tabs. We may be bragging a little, but the decision turned out much better than we realized at the time.

Q: Were there any major problems or directions along the way that later made you change your original view?

Chris: Yes. When we initially designed Granola, the interaction model was completely different. In the first version we experimented with, you typed one or two keywords during the meeting, pressed the Tab key, and Granola wrote the full notes in real time. The demo was cool, and using it felt a little magical.

We spent six months trying to make this feature perfect, but failed. We found that no matter how well the notes were written, people could not help reading notes that were being generated in real time, and the result was very distracting.

Granola’s original intention was to help you focus more on the meeting, but this backfired. People stared at the notes, edited them when they weren’t satisfied, and then realized they had not been listening to the other person, which was terrible. So we switched completely to a more ordinary model: during the meeting it is a normal text editor, like a notepad, and the “magic moment” happens after the meeting ends.

This means that Granola’s value does not appear until after you have used it for an entire meeting, which is not ideal. When building a product, you want the “magic moment” to appear in the first 20 seconds. But this change made the product much better. We spent six months on the wrong path before we finally accepted that there was a better way.

04

AI Meeting Tools that Don’t Save Audio

Q: I’m curious about some specific problems you encountered during development, such as recording. I feel that more and more people ask to record a conversation; I was uncomfortable with it at first, but now it seems normal. Do you think we’ll get to the point where everything is recorded by default? Can you explain your approach? And how do you think this will develop?

Chris: I think that we, as a society, really need to weigh the pros and cons seriously. I believe that in a few years, many people will find it very inconvenient to hold meetings or work conversations without tools like Granola, because these tools will become extremely powerful and practical.

However, as you pointed out, this does involve a privacy trade-off. We need to find a reasonable balance between maximizing the practical value of these tools and minimizing intrusions on personal privacy. As for where this line will eventually be drawn, I cannot be sure at the moment. When we first developed Granola, we decided after careful consideration not to record or store any audio data. Although Granola listens to audio in real time and transcribes it, the original audio itself is not saved.

Many people are puzzled by this and even mock us for it, asking why we don’t save audio. Is the audio recording unimportant or useless? Of course it is useful! Being able to hear someone’s original words and tone has value, and by not saving audio we do give up some value that users could have had. But it also means that Granola is far more secure than AI bots that join your meetings and make and store audio and video recordings. Those bots store the audio and video, and who knows how long that data will ultimately be retained?

In my opinion, the Granola experience is completely different in this respect. It can generate high-quality meeting notes and provide a very useful transcript, but it is much less “invasive.” I think the real question is what happens when we apply such tools in the real world, especially outside of work. In settings like Zoom meetings, with a clear purpose and clear expectations, recording is usually understood and accepted. But in social life, I believe the norms will be completely different. I personally don’t know exactly where things are headed, but in work settings most people want to capture this information because AI brings users enormous value. In social situations, it is likely to be controversial.

Do you remember how strong the public backlash was when Google Glass first launched, because it could record other people without their knowledge? I can easily imagine similar situations arising again when AI wearables or “pendants” become popular. If someone wears that kind of device at a party, it is likely to put off the other people present.

Q: How long do you think it will take for in-person meetings to become like online Zoom meetings, where everyone expects recording by default? I’ve long wanted us to get to that state.

Chris: Our iOS version is coming soon (already available). My co-founder Sam Stephenson and I first built this tool purely because we needed it ourselves. To be honest, we didn’t expect it to be so widely welcomed. Once you get used to recording important work conversations with Granola, you are in effect “outsourcing” part of your personal memory, and you naturally come to expect that you can easily review the key information from any conversation at any time.

We have received emails from users who are so eager that they sound almost “angry,” describing exactly the situation you just mentioned: “One-third of the meetings I attend are face to face, and it feels like fighting empty-handed. I am desperate to use Granola in the room.” I believe that assistive tools like the Granola we are building will eventually become widespread and be used by everyone.

As for how social norms will evolve, I am personally not in favor of a future in which all communication is secretly recorded, although I know some people in Silicon Valley see that as a possible direction.

I personally think that in the workplace, simply using a mobile phone as the tool is enough. When you put your phone on the table, a tacit “social contract” easily forms among the participants, and everyone knows what is going on. This is how we work inside Granola: at every meeting, it’s clear whether any phones are taking notes and whose they are. I think this transparent social contract is very important, and individuals need to maintain it consciously, as they would any other part of managing their work. If you openly put your phone on the table, everyone can benefit. I think this change in behavior may come faster than you expect in work settings; in social settings, the situation will be completely different.

05

The biggest obstacle to AI application:

No “steering wheel” yet for collaborating with AI

Q: Judging from your experience, is one key takeaway that we need to build better tools for collecting context? You are doing this for conversations; what about other kinds of context? Can you picture what “context collection” features might look like?

Chris: Collecting context, meaning getting all the data, is not really difficult. It won’t take long to feed all your emails, notes, company documents, and tweets into Anthropic or ChatGPT. The other question is which of this data is actually relevant to what I am about to do right now. That may be a technical problem or a user interface problem.

I think one of the biggest obstacles to collaborating with AI is interface design. Our interaction with ChatGPT resembles the terminal era of early computing, where you type commands and the computer returns the result. This approach will not disappear, but it will come to look outdated, and it leaves users with little sense of control.

I’ve looked into an analogy. The earliest cars had no steering wheel, only a tiller that could be pushed left and right. That is fine if you drive very slowly, but at higher speeds the tiller becomes useless. Move it slightly too far and you may veer off the road, which is a serious safety problem. Later, someone invented the steering wheel, which gave the driver much finer control. I think we haven’t yet found a “steering wheel” for collaborating with AI. Control is still very coarse, and everything happens turn by turn: I write something, the AI responds, and I adjust again. It will be smoother and more collaborative in the future.

Q: Can you explain in detail what this smoother interaction will look like? How does it differ from the back-and-forth we have now?

Chris: It depends on the tool. But right now it feels as if the AI and I are not working on the same canvas, but side by side on two separate canvases. A very basic example: in ChatGPT or Claude, you can’t edit the AI’s answer directly. You can’t go in and say “This is stupid, change the wording.” You have to instruct it, “Please make this a bit shorter,” and hope it rewrites the text the way you want. Soon this will look crazy.

There is a historical analogy here, and in hindsight these inventions will seem obvious. Early text editors had the concept of a “mode”: you entered insert mode to write, exited, and then entered delete mode or copy mode. Larry Tesler introduced a change that made these operations fluid without switching modes, which was unimaginable at the time.

The future of AI is the same. It is difficult to predict exactly what it will look like, but I promise it will be completely different from today. The granularity of control and the speed of collaboration will improve greatly, and the experience will be smoother.

Note: Larry Tesler worked at the Stanford Artificial Intelligence Laboratory and focused mainly on human-computer interaction. He is known for inventing and naming the “cut, copy, paste” commands and the “find & replace” function that are widely used in modern computers.

Q: Are there any ways people use Granola that have particularly surprised or enlightened you?

Chris: A few things have impressed me. First, the diversity of usage scenarios. We designed Granola for work meetings, but soon someone told us, “My partner has cancer and we have many appointments with doctors. Granola has been extremely valuable in the process, and I don’t know how I coped before.” That was an unexpected use case.

Some people also find creative ways to feed more context into Granola, which is not something we designed for. For example, someone will start a Granola “meeting” and brainstorm out loud alone, or plan the day by saying what needs to be done and asking Granola to help prioritize. Some people watch YouTube videos and take notes while studying. This was the biggest surprise.

Another behavioral change is that people read their notes directly less and less, and instead ask for the information they are looking for through Granola’s chat feature.

Q: How exactly does Granola evaluate models? And do you switch to the best-performing model whenever one appears?

Chris: Exactly. Evaluating model capabilities is not simple, but what you describe is what we do. Rather than relying on a single model, we use multiple models inside Granola, combined in different ways, and we are ready to switch to whichever model is best on any given day.

Q: How do you think about competition between your product and users working directly with foundation models? Just as people used to ask whether a big company would build the same thing itself, everyone now asks whether the model companies will build applications like yours. When designing the product, how do you think about not having your market taken by the model companies?

Chris: I don’t have a “crystal ball” and cannot predict the future, but my view is that there are two key axes. The first is frequency of use: do I use it once or twice a month, or 500 times a day? The second is how good the result needs to be.

Low-frequency tasks that do not need to be done especially well will be absorbed by general-purpose systems. Most consumer use cases fall into this category, because it is hard to build a habit of using a new tool for a low-frequency use case. If you only need an “okay” result, a general assistant like Claude is enough, and the more you use it, the better it will work for you.

At the other end are use cases that are high-frequency and demand excellent performance. This is the “professional tool” space, and there will always be people who need top-notch tools. Granola belongs here. You may ask, “If the model becomes smart enough, won’t it cover everything?” My answer is that this is not a question of intelligence, but of how far the user interface is optimized for the use case. If a product focuses on one use case and takes it to the extreme, its experience will be better than that of a general-purpose tool. The limiting factor is product design and user experience, not the underlying technology.

06

Before finding the right direction,

protecting the product’s ability to “change direction” is important

Q: If model providers and application developers are seen as competing layers, how do you strengthen Granola’s stickiness and value through product architecture? How do you make sure users will not easily move to a better-performing competitor? Of course, you are still at an early stage and mainly focused on building a good product, but have you started to think about this?

Chris: The only answer in this field is that you have to create something better than others. There are switching costs and small “moats”, but the only way to win is to continue to produce better products than others.

A product like Granola has an inherent switching cost, because the more context it holds, the more useful it becomes for you. Another product would have to be much better than Granola before users would give it up. But I think if you coast for three months, you are in trouble.

Q: How do you and the team iterate quickly? As an AI application company, what are your thoughts and practices here? What has worked, and what has failed?

Chris: When we develop a feature, we are very clear about whether we are in “development mode” or “exploration mode”, because the two require completely different approaches. Is there a clear idea that just needs to be executed quickly, or is it an open question that has to be explored before an answer can be found?

If we know what to build, our experience matches the common advice: build an MVP as soon as possible, give yourself a deadline, push it to real users (not necessarily all of them), and then try to speed up the iteration.

We had trouble in the past because we didn’t understand this distinction and applied the “ship as soon as possible” philosophy to open questions. As a result, we rushed a bad product out to users, thinking, “We built this in two weeks, which is great,” when in fact the problem was not solved. We had shipped, but we had not met the user’s need.

This matters especially in this field, because the pressure pushes you to move fast, yet it is critical to occasionally take more time to think about how to do things well. For example, it took us a year to launch Granola, and by then we were already seven years behind the AI notes field. If we had publicly launched the initial interaction model, we could not have changed it. Users would have learned its behaviors, and those who stayed would have liked it, but retention would not have been high, and that would have been that. Before you find the right direction, it is important to protect your ability to change the product’s direction. Balancing this in a fast-paced field is a challenge.

Q: Are your ambitions or goals shifting as the product develops? On a scale of 1 to 10, how would you score yourself now? Has the score gone up since the beginning?

Chris: I ask myself every day whether we are doing the right thing. Sam Stephenson, while working with me and testing large language models, came to the view that all work tools would be rebuilt or reshaped.

We think there will be a new category of software, but it doesn’t have a name yet. People like you and me, whose work revolves around communicating with people, projects, and meetings, will use it all day long. This is what we wanted to build on day one, and it still is. If you are not OpenAI or Anthropic, you have to be exceptionally good at one particular use case right now; you cannot just build the great product of the future. Every step has to be super useful to the user.

You have to think clearly: how much time do you spend building the next five obviously useful things, and when do you make a bold move? We want Granola to go from a note-taking tool to the tool where people do most of their work. If you are writing a document or memo, it should be easier in Granola because it knows all the relevant context of your work. But this is a big step, and doing it well takes a lot of work and iteration.

Q: Imagine a future company that does better than Granola. If you were its VP, what major market forces would you worry about?

Chris: There are a lot of things you could worry about, but you have to be selective, because many of them are outside your control. What we chose to worry about at Granola is the competitors who have not released their products yet: the kind of startup that sees what we and others have figured out, starts from there, and executes faster than we do.

I was surprised by how quickly the tech giants reacted. After ChatGPT took off, every big company adjusted its strategy, and I was impressed by their leadership. But making a decision does not mean you can execute it well. One of our investors once said: list the AI features you use every day. How many are made by large companies, and how many by startups? Surprisingly, startups account for a lot of them, despite the huge investments big companies have made in building AI capabilities.

Will this change over time? Maybe. Startups often serve as the R&D departments of large companies: once the large companies understand what works, they can roll it out to their large user bases. But some companies of this generation will grasp the key insights earlier and then become huge.

07

In AI application companies,

Small teams can do big things

Q: I have recently become especially interested in the phenomenon of small teams building big products in AI applications. Some very powerful AI tools have teams of fewer than 25 people, and even as users and revenue grow rapidly, the headcount has not expanded much. How is building a company in this field different from the companies you worked at before AI emerged?

Chris: I think two features stand out in AI right now. First, the technology is developing surprisingly fast. Second, application-layer products like Granola that are built on large language models benefit greatly from rapid progress in the underlying technology. We have invested a lot of energy in thinking about how to create an excellent end-to-end experience for our users. Without these powerful technical foundations, we would probably need a much larger team to achieve the current functionality and quality.

Even so, Granola stands out largely because of our attention to technical details and edge cases. Some problems are ones you would not normally think of. For example, if the user takes off their AirPods midway through a meeting, or joins a Zoom meeting with multiple audio channels, Granola needs special handling to keep the experience smooth and seamless. We use AI tools in our internal work as much as possible, but it has to be said that some of the relevant development tools are not yet mature.

We are still some way from full end-to-end automation, so we still need to invest a lot of people and effort. I personally don’t like predicting specific dates, because in this field long-term prediction is almost impossible. But if we fast forward three years, I think the way we work and the types of tasks that can be handed off to AI will have changed drastically.

Q: The challenges in this area are mainly at the engineering level, right? Have you imagined a future where, with Cognition, Cursor, or other tools, team members act more like managers than engineers, just telling the system what to do without designing the endpoints themselves?

Chris: That’s right. One goal our CTO has set is to minimize the number of lines of code each engineer on the Granola team has to write. We recently held an interesting team event with the theme “Use AI in situations where you would never expect to, and challenge your habits and comfort zone.” I have a great example to share: I was in Spain at the time and wanted to grill shrimp for the team. I had bought the shrimp but had never grilled any before. I typed “How to grill shrimp” straight into ChatGPT, and the CTO told me, “No, you have to give it more accurate and richer context.”

He had me take pictures of the grill and the shrimp and upload them to the AI, and it turned out he was right. We found out that the shrimp were actually precooked, which we had missed because we couldn’t read the Spanish on the packaging, so we didn’t need to grill them at all, just warm them up a little. If I had only typed a text question, I would never have discovered this key fact. It is a good illustration: to make full use of these new tools, you need to build a new set of intuitions and habits of thought. It is like when the Internet first appeared: the older generation would not naturally think to search Google, but the younger generation was used to it.

I believe “AI native” users will have a very natural sense of what context to give AI and how to collaborate with it effectively. When they are unsure, they may instinctively provide more background and see what the AI comes back with, rather than just asking the question directly. I am 38 years old, I consider myself attuned to these new technologies, and I think about them every day, but even so the team sometimes reminds me that I am not using AI often or deeply enough. If that is true even of me, you can imagine the situation for the general public.

08

Don’t outsource your thoughts to AI

Q: Setting aside all current technical limitations and imagining boldly, what do you think the “thinking tools” we use will evolve into over the next five or ten years?

Chris: I want them to be more human, and to be tools that make people better. Mainly, they should unlock our creativity and unleash the amazing abilities unique to human beings. People who build AI tools must consciously work in this direction, because there is a line to hold here: you want to outsource all the repetitive, boring, mindless work, but you don’t want to outsource judgment.

You mentioned generating ideas: having AI give you 100 ideas and picking the right one, which is great. But the danger is that everyone does this and ends up only ever choosing among the AI’s ideas. Take the saying “writing is thinking”. If AI takes over the entire writing process, some of what it takes over is worthless repetitive labor, but some of it is part of your thinking. If you accidentally outsource that part, there are risks.

Today, sources of information and inspiration are too scattered, and we often look at data in isolated silos. I want a tool that can dynamically pull the most relevant information from my personal life, my context, and humanity’s collective wisdom, and present it to me in real time so that I can interpret and use it. No one knows yet what this tool looks like.

Q: I once saw a friend give a particularly cool demo: he connected a microphone to a tool similar to Midjourney, which generated and projected images related to the conversation in real time, at five to eight frames per second, as you spoke. He used it to build some creative experiences. But as you said, imagine what this kind of application would look like in a work setting. While helping you “externalize” your thinking, it could also surface information or inspiring ideas you would not have come up with yourself. But it is actually very hard to make it that useful without distracting you at all.

Chris: Science fiction is full of wonderful ideas that don’t work in practice for trivial tactical reasons, just as taking notes in real time can be distracting. Human experience defines “what works.”

Q: Let’s talk about investors. You have raised money from very good investors and must have talked to many people. Right now, investors across tech and the private markets are basically all focused on the AI wave. They especially want to know how to interact better with founders and these new AI application companies, and how to put money where it has the most potential and is used most efficiently. Do you have any advice for them? For example, could you share what the best and worst investors you have worked with did?

Chris: I’m not an investor, so it’s hard to give advice. What I can say is what appeals to me. I mentioned that when building new features, we divide the work into “development” and “exploration” modes. AI as a whole is an exploration problem, and no one knows the correct answer.

Foundation models may be entering development mode, but the application layer is entirely exploration. This requires a specific sensibility. I think it means being product-centered and thinking deeply about what makes a good product for users. There are not many investors who can talk to me about this.

What gets my attention is the ability to write, in an introductory email, a specific insight about the product’s behavior or the space: where Granola got something right or wrong. I want partners who will work together for the long term and who share a worldview and a way of solving problems. The specific execution will change, but do we share a similar framework for thinking? That is what my investors and I have. They are excellent product thinkers who can engage at multiple levels.

Q: Do you think an AI tool can succeed without a data advantage? For example, a company that neither has unique data nor accumulates first-party data the way you do. Can AI applications without a data moat still succeed? Is data the key to a product’s durability and advantage?

Chris: The need for data is not that great now, and getting a small amount of data is neither expensive nor difficult. The trend is that foundation models already understand the world and can do many things, and you add a little data to optimize for your use case. In the past, machine learning needed millions of samples; now 50,000 is enough. Even when the data is more expensive, 50,000 is not hard to get.

I’m thinking about what data I can’t get. I think everyone will be able to build applications soon, but the impact on the world is still unclear. I think of the example of photography. In the early days, simply being able to use a camera put you ahead of most people, because cameras were very expensive and hard to learn. Now everyone has a phone in their pocket, and everyone is a photographer who can take amazing pictures. But at the same time, the premium on “taste” is higher. If you are really good and stand out, that may be worth even more.

Q: Last question: why did you choose to build Granola?

Chris: I am happiest when I am creating something I believe in and think is important; otherwise I am not happy. That’s who I am.

Author: Founder Park

Source: https://mp.weixin.qq.com/s/xu4W7jhQlyalLXWdTmfcXQ

The copyright belongs to the author. For commercial reprints, please contact the author for authorization. For non-commercial reprints, please indicate the source.